I ran across a problem this week while putting the finishing touches on a data access layer for a series of WCF services. These services needed to pull information from an IBMi (AS/400) "database" (i.e., what is actually a pre-1980s file system). I won't go too far into the problem, but I needed to cache information about which environment and/or library to use when invoking a program running on the IBMi. I decided this information was best stored in a Dictionary<string, string>, since I could look up a program by name and easily retrieve the library I needed to be in to invoke it.

So then, how best to cache this information between successive calls to a WCF service?

Well, as it turns out, the IIS worker process is quite handy. So long as the application pool isn't recycled, either by an idle time-out or manually, the worker process hosting your service stays in memory. WCF may create a new instance of your ServiceContract class for each call or session, but any static member of that class lives as long as the hosting AppDomain does and will therefore remain, well, static.

So my first thought on solving this problem was to simply define a Dictionary<string, string> object as a private static member of my ServiceContract class, load it up, and then use it within any object that my ServiceContract class needs in order to do its work. Basically something like this:

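```csharp
using System.Collections.Generic;

// IService1 is the [ServiceContract] interface, as in the default WCF project template.
public class Service1 : IService1
{
    // Static, so it lives as long as the AppDomain hosting the service,
    // not just for the lifetime of a single Service1 instance.
    private static Dictionary<string, string> myDictionary = new Dictionary<string, string>();

    public string GetData(int value)
    {
        Service1.myDictionary.Add(myDictionary.Count.ToString(), value.ToString());
        return string.Format("You entered: {0}", value);
    }
}
```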

See any problems yet?

If you guessed that, because myDictionary is a private static member of Service1, I would need to pass it along to each and every object that requires access to the items in the dictionary, then you can immediately see my folly. If you aren't planning on having many (or any) helper objects, this really isn't a big deal. The problem I faced was that the ServiceContract class of the WCF services I was coding for sat about 8 or 9 layers above my data access layer. I was really going to be popular changing every object between the topmost ServiceContract and my object, especially since none of the objects in between actually cared about or needed the contents of the Dictionary.

Enter the MemoryCache

MemoryCache is a class in the System.Runtime.Caching assembly. It was added a few years ago with the release of .NET 4.0. It never really caught my attention until this problem came up, but essentially it is a way to store away any objects you might need someplace else. Or put another way, if you are an old C programmer (like me), it is a way you can tuck away global variables (or objects in this case) for reuse in other places in your application. The API for this class is documented by Microsoft, and a quick search will turn up more information and sample code on its use. The purpose here really isn't to describe the API but rather to provide a practical example of its use.
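For readers who haven't used it, here is a minimal sketch of the handful of ObjectCache calls the rest of this post relies on (Get and Set), plus Contains, Remove, and AddOrGetExisting. The class name CacheBasics and the "GREETING" key are made up for illustration. On the .NET Framework this needs a reference to the System.Runtime.Caching assembly; on .NET Core and .NET 5+ the same API ships as the System.Runtime.Caching NuGet package.

```csharp
using System.Runtime.Caching;

// Hypothetical demo class; ObjectCache is the abstract base of MemoryCache.
public static class CacheBasics
{
    // Shared, process-wide cache: every caller that uses MemoryCache.Default
    // sees the same entries.
    private static readonly ObjectCache Cache = MemoryCache.Default;

    public static string Demo()
    {
        // Entries are keyed by string and stored as object.
        Cache.Set("GREETING", "hello", new CacheItemPolicy());

        // Get returns null when the key is missing (or has been evicted).
        string greeting = Cache.Get("GREETING") as string;

        // AddOrGetExisting only inserts when the key is absent. It returns the
        // value already cached, or null if the new value was inserted.
        object previous = Cache.AddOrGetExisting("GREETING", "ignored", new CacheItemPolicy());

        bool hadKey = Cache.Contains("GREETING");
        Cache.Remove("GREETING");
        bool stillThere = Cache.Contains("GREETING");

        return $"{greeting}|{previous}|{hadKey}|{stillThere}";
    }
}
```

Calling CacheBasics.Demo() returns "hello|hello|True|False": the second insert is ignored because the key already exists, and the entry is gone after Remove.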

So on to my first demo project using the MemoryCache object. My first set of changes simply swapped the static Dictionary field for a static MemoryCache field that holds the Dictionary. While this didn't solve the problem identified above, it gave me some understanding of how the object works, as I was able to create, store, retrieve, and update the Dictionary object across successive calls to my test WCF service. Below are the changes I made to my "GetData" method.

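```csharp
using System.Collections.Generic;
using System.Runtime.Caching;

public class Service1 : IService1
{
    // MemoryCache.Default is the single, process-wide cache instance.
    private static ObjectCache myCache = MemoryCache.Default;

    public string GetData(int value)
    {
        // Get returns null if "ALIST" has never been stored (or was evicted).
        Dictionary<string, string> myDictionary = (Dictionary<string, string>)Service1.myCache.Get("ALIST");

        if (myDictionary == null)
        {
            CacheItemPolicy policy = new CacheItemPolicy();
            policy.Priority = CacheItemPriority.Default;

            myDictionary = new Dictionary<string, string>();
            Service1.myCache.Set("ALIST", myDictionary, policy);
        }

        // The cache holds a reference to this Dictionary, so entries added
        // here are visible to later Get calls.
        myDictionary.Add(myDictionary.Count.ToString(), value.ToString());

        return "OK";
    }
}
```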
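One thing to watch in GetData: WCF can service several requests at once on different service instances, and the check-then-Set sequence lets two concurrent callers each create their own Dictionary, with the last Set winning. Dictionary<TKey, TValue> is also not safe for concurrent writes. The sketch below shows one way around both issues, assuming you are free to change the cached type and that nothing else stores a different type under "ALIST". It combines AddOrGetExisting, which inserts only when the key is absent, with ConcurrentDictionary. The class name CallLog is hypothetical.

```csharp
using System.Collections.Concurrent;
using System.Runtime.Caching;

// Hypothetical helper; "ALIST" is the key used by the service code above.
public static class CallLog
{
    private const string CacheKey = "ALIST";

    // Returns the shared dictionary, creating and caching it on first use.
    public static ConcurrentDictionary<string, string> GetOrCreate()
    {
        var candidate = new ConcurrentDictionary<string, string>();

        // AddOrGetExisting inserts the candidate only if the key is absent.
        // It returns the entry that was already cached, or null if the
        // candidate was the one stored, so all callers share one instance.
        var existing = MemoryCache.Default.AddOrGetExisting(CacheKey, candidate, new CacheItemPolicy())
            as ConcurrentDictionary<string, string>;

        return existing ?? candidate;
    }

    // Mirrors GetData: records the value under a Count-based key.
    public static bool Record(int value)
    {
        ConcurrentDictionary<string, string> log = GetOrCreate();

        // TryAdd returns false instead of throwing if another thread
        // claimed the same Count-based key first.
        return log.TryAdd(log.Count.ToString(), value.ToString());
    }
}
```

Allocating a throwaway candidate on every call is the price of avoiding an explicit lock; for a small object like this that is usually acceptable.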

I also added a new method that returns the complete list of Dictionary items to the client, so I could keep checking whether the MemoryCache (and its embedded Dictionary object) retained the information I needed to keep around.

```csharp
public List<Avalue> GetAllCalls()
{
    List<Avalue> values = new List<Avalue>();
    Dictionary<string, string> myDictionary = (Dictionary<string, string>)Service1.myCache.Get("ALIST");

    // Nothing cached yet (or the app pool was recycled): return an empty list.
    if (myDictionary != null)
    {
        foreach (var pair in myDictionary)
        {
            Avalue aValue = new Avalue() { CallTime = pair.Key, CallValue = pair.Value };
            values.Add(aValue);
        }
    }

    return values;
}
```
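The post doesn't show the Avalue type that GetAllCalls returns. Assuming it is an ordinary WCF data contract with two string properties matching the names used above, it might look something like this (only CallTime and CallValue come from the original code; everything else is an assumption):

```csharp
using System.Runtime.Serialization;

// Hypothetical definition of the type returned by GetAllCalls.
[DataContract]
public class Avalue
{
    // The Dictionary key (a call counter, despite the name).
    [DataMember]
    public string CallTime { get; set; }

    // The value the client passed to GetData or SetNewItem.
    [DataMember]
    public string CallValue { get; set; }
}
```

Marking both properties with [DataMember] is what lets WCF's DataContractSerializer send them to the WinForms client.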

Once this second version of my prototype was working, I wanted to see whether another object, one that comes into and goes out of scope during a different method call on my service, could get a handle to the same Dictionary object and update it for me. If this worked, I could avoid changing all the objects between my data access layer and the ServiceContract object.

To test this, I created another class within the WCF project called "DoSomething", which is defined below.

```csharp
using System.Collections.Generic;
using System.Runtime.Caching;

public class DoSomething
{
    private ObjectCache doSomethingCache;

    public DoSomething()
    {
        // Same process-wide instance that Service1 uses; nothing is passed in.
        doSomethingCache = MemoryCache.Default;
    }

    public void DoIt(int value)
    {
        Dictionary<string, string> myDictionary = (Dictionary<string, string>)doSomethingCache.Get("ALIST");

        if (myDictionary != null)
        {
            myDictionary.Add("DoSomething " + myDictionary.Count.ToString(), "DoIt" + value.ToString());
        }
    }
}
```
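Applied back to the original problem (looking up the library an IBMi program runs in), the data access layer could hide the cache key and the cast behind a small static helper. The sketch below is hypothetical: the class name ProgramLibraryCache, the "PGMLIBS" key, the case-insensitive lookup, and the Loader delegate that would actually query the IBMi are all placeholders. On a cache miss (the first call, or after the application pool recycles) it rebuilds the map through that loader.

```csharp
using System;
using System.Collections.Generic;
using System.Runtime.Caching;

// Hypothetical helper: maps an IBMi program name to the library it runs in.
public static class ProgramLibraryCache
{
    private const string CacheKey = "PGMLIBS";

    // Placeholder for whatever actually reads the program/library list
    // from the IBMi; assumed to be set once at startup.
    public static Func<IDictionary<string, string>> Loader { get; set; }

    public static string GetLibrary(string programName)
    {
        var map = MemoryCache.Default.Get(CacheKey) as IDictionary<string, string>;
        if (map == null)
        {
            // Cache miss: first call, or the app pool was recycled.
            if (Loader == null)
                throw new InvalidOperationException("ProgramLibraryCache.Loader has not been set.");

            // Case-insensitive copy (an assumption); it is only read after this point.
            map = new Dictionary<string, string>(Loader(), StringComparer.OrdinalIgnoreCase);

            // If two callers miss at once, keep whichever map was cached first.
            var existing = MemoryCache.Default.AddOrGetExisting(CacheKey, map, new CacheItemPolicy())
                as IDictionary<string, string>;
            if (existing != null) map = existing;
        }

        string library;
        return map.TryGetValue(programName, out library) ? library : null;
    }
}
```

With this in place, a class eight or nine layers down can call ProgramLibraryCache.GetLibrary with a program name without the ServiceContract class passing anything to it.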

Notice that the constructor obtains a handle to the MemoryCache object, and the DoIt method then uses this handle to pull out the Dictionary object and add an entry to it. Notice too that the handle to the MemoryCache goes out of scope along with this object; the cache itself, being MemoryCache.Default, lives on.

The final version of my service adds a new method called SetNewItem. This method creates a DoSomething object, calls its DoIt method, and returns, leaving the DoSomething object out of scope and eventually eligible for garbage collection.

```csharp
public string SetNewItem(int value)
{
    DoSomething something = new DoSomething();
    something.DoIt(value);
    return "OK";
}
```

As you can see, I didn't need to pass around either the Dictionary or the MemoryCache object; the DoSomething object is able to obtain a handle to the Dictionary object on its own.
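The sharing works because both classes go through the same MemoryCache.Default instance, not because every MemoryCache is global. A separately constructed MemoryCache is its own store, as this small sketch shows. CacheWriter and CacheReader are hypothetical stand-ins for Service1 and DoSomething, and the "SeparateCache" name is arbitrary.

```csharp
using System.Runtime.Caching;

// Stands in for Service1: writes through MemoryCache.Default.
public class CacheWriter
{
    public void Store(string key, object value) =>
        MemoryCache.Default.Set(key, value, new CacheItemPolicy());
}

// Stands in for DoSomething: a separate object that never sees CacheWriter.
public class CacheReader
{
    public object FromDefault(string key) => MemoryCache.Default.Get(key);

    // A separately constructed MemoryCache has its own entries, so it
    // does not see anything written to MemoryCache.Default.
    public object FromNamedCache(string key)
    {
        using (var named = new MemoryCache("SeparateCache"))
        {
            return named.Get(key);
        }
    }
}
```

After new CacheWriter().Store("SHARED", "value"), a CacheReader gets "value" back from FromDefault but null from FromNamedCache.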

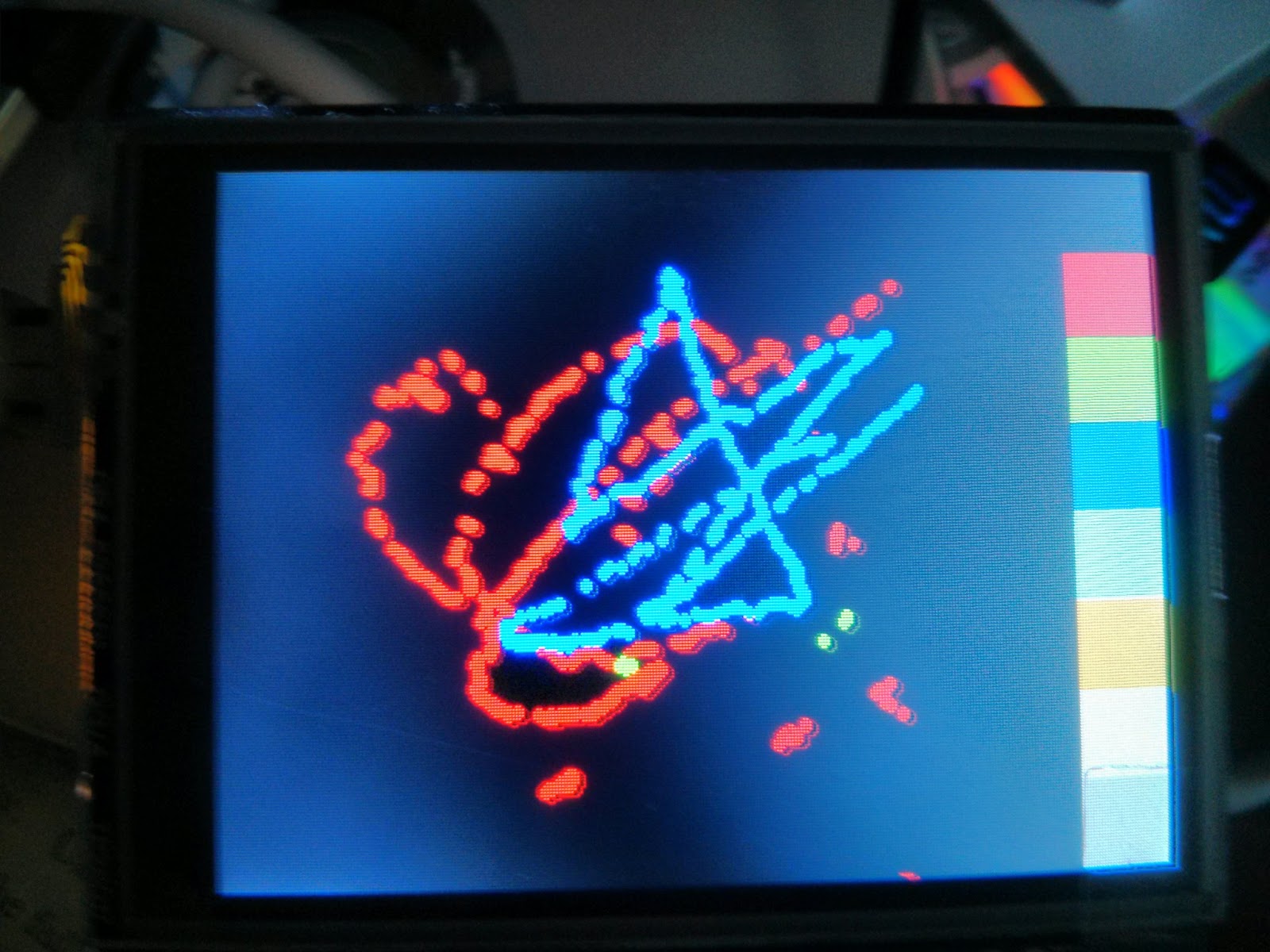

So, did it actually work? Did the MemoryCache manage to stay around between calls? Was the Dictionary object populated correctly? The answer is, of course, yes. The screenshot shows the WinForms application that calls the Service1 web service. I invoked the GetData method a few times, as well as the SetNewItem method, and the client gave each of these calls a random number between 0 and 42. After calling these methods a number of times, I requested the full contents of the Dictionary object and displayed them in a list box on the screen.

As illustrated above, the Dictionary object is alive and provides a cached list of items that I can pull from pretty much anywhere within my running process. Once the application pool was recycled, I pulled down the list again. Because recycling replaces the worker process, and the in-memory cache along with it, there were no more entries in the Dictionary.